As part of the July 2019 Microsoft hackathon, the OrcaHello project was initiated through a collaboration of Microsoft volunteers led by Chris Hanke and Dr. David Bain of Orca Conservancy. Since then, with growing access to data from the Orcasound hydrophone network and support from Microsoft’s AI for Earth program, collaborators have continued to refine this real-time inference system. Two teams from the 2019 hackathon that worked on separate orca machine learning projects, the Pod.Cast annotation tool and the OrcaHello project, have combined efforts in pursuit of “AI for Orcas”: specifically, a reliable real-time binary classifier for Southern Resident killer whale (SRKW) calls.

Working together from 2019 to 2021, the combined team launched a minimum viable product (MVP) of the live inference system during the July 2020 Microsoft hackathon. This roughly three-year effort involved heroic dedication from about 25 Microsoft employees, all volunteering within the July 2020 and October 2021 hackathon teams led by Prakruti Gogia, Akash Mahajan, Aayush Agrawal, Chris Hanke, Mike Cowan, Claire Goetschel, Michelle Yang, and Adele Bai. See the full list in the Lead contributors and collaborators section below.

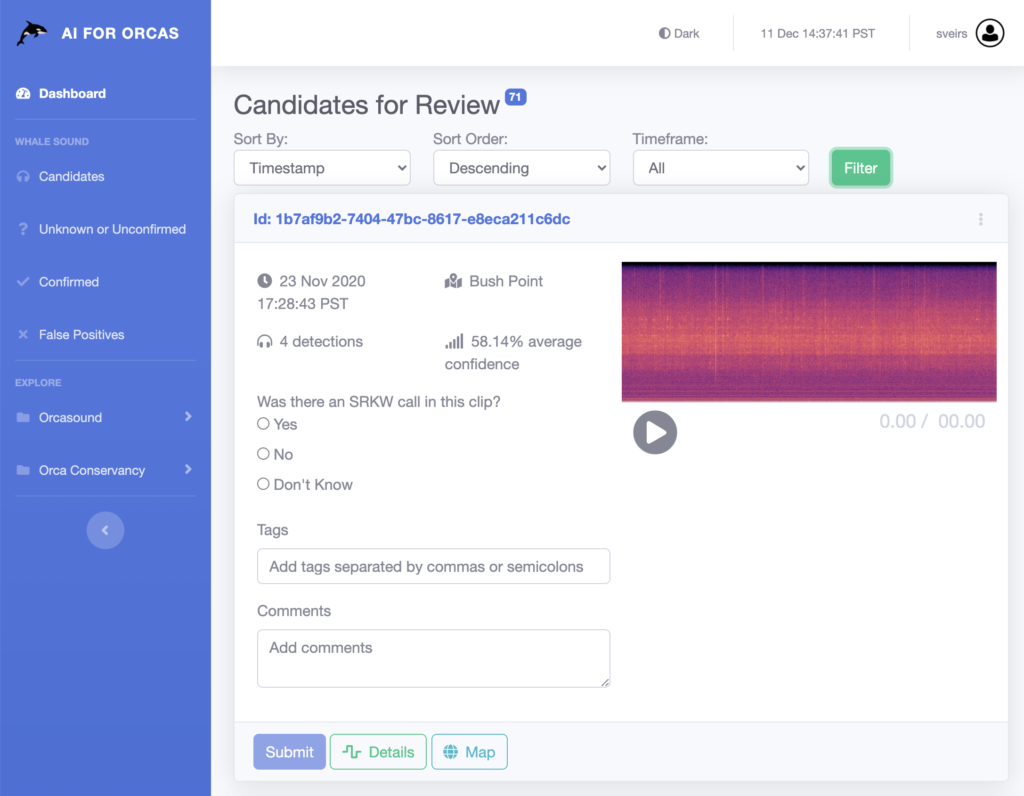

In September 2020, beta testing began with live data from a single Orcasound location, with bioacoustic experts Scott Veirs, David Bain, and Val Veirs serving as moderators. In November, the other two Orcasound hydrophone locations were added to the beta test. By April 2021, preliminary results included ~1300 candidate detections (~60 detections per month for each of the 3 Orcasound hydrophone locations). Moderators classified 211 of these candidates as true positives, 15 as “unknown” SRKW-like signals, and 1075 as false positives.
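The moderator counts can be turned into a rough precision figure for the detector. This is a back-of-the-envelope sketch, assuming precision = true positives / (true positives + false positives). Because the 15 “unknown” signals are ambiguous, it reports one figure that excludes them and one that counts them as hits; neither number is an official project metric.

```python
# Moderator outcomes reported for the beta test (September 2020 to April 2021)
true_positives = 211
unknown = 15
false_positives = 1075

# Total candidates reviewed by moderators
reviewed = true_positives + unknown + false_positives

# Strict: ignore the ambiguous "unknown" signals entirely
precision_strict = true_positives / (true_positives + false_positives)
# Lenient: treat "unknown" SRKW-like signals as hits
precision_lenient = (true_positives + unknown) / reviewed

print(reviewed)                   # 1301
print(f"{precision_strict:.1%}")  # 16.4%
print(f"{precision_lenient:.1%}")  # 17.4%
```

Either way, roughly one candidate in six was a confirmed call, which is why human moderation sits between the model and subscriber notifications.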

Ongoing development is led by Prakruti, Akash, and Aayush, mainly through AI for Earth and OneWeek hackathons, but also through many weekend hacks and asynchronous work via Microsoft Teams. In its current state, the live inference system does the following:

- ingest live audio data from Orcasound hydrophones via cloud-based storage;

- detect orca calls in short (2.45-second) data segments using a machine learning model;

- notify moderators and present 1-minute candidates via a moderation UI;

- validate labels based on moderator feedback; and,

- issue notifications to subscribers for true-positive candidates.
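As a rough illustration of the detect-and-present steps, the sketch that follows groups per-segment model scores into 1-minute candidates for moderators. It assumes non-overlapping 2.45-second segments, a 0.5 score threshold, and a rule that a minute becomes a candidate when at least one segment crosses the threshold. The names `find_candidates` and `Candidate`, and both default parameters, are hypothetical and not taken from the aifororcas-livesystem code; the real system may use overlapping windows or a different aggregation rule.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence

SEGMENT_SECONDS = 2.45    # model input length stated in the text
CANDIDATE_SECONDS = 60.0  # candidate length shown to moderators, per the text


@dataclass
class Candidate:
    start_s: float          # window start, in seconds from the start of the stream
    end_s: float            # window end, in seconds
    positive_segments: int  # number of segments scoring at or above the threshold
    mean_confidence: float  # mean score of those positive segments


def find_candidates(
    scores: Sequence[float],
    threshold: float = 0.5,  # hypothetical decision threshold
    min_positive: int = 1,   # hypothetical minimum number of hits per minute
) -> List[Candidate]:
    """Group per-segment call probabilities into 1-minute candidate windows.

    Assumes segment i covers [i * SEGMENT_SECONDS, (i + 1) * SEGMENT_SECONDS)
    with no overlap, and assigns it to the minute containing its start time.
    """
    hits: Dict[int, List[float]] = {}
    for i, score in enumerate(scores):
        if score >= threshold:
            window = int((i * SEGMENT_SECONDS) // CANDIDATE_SECONDS)
            hits.setdefault(window, []).append(score)

    candidates = []
    for window in sorted(hits):
        window_hits = hits[window]
        if len(window_hits) >= min_positive:
            candidates.append(
                Candidate(
                    start_s=window * CANDIDATE_SECONDS,
                    end_s=(window + 1) * CANDIDATE_SECONDS,
                    positive_segments=len(window_hits),
                    mean_confidence=sum(window_hits) / len(window_hits),
                )
            )
    return candidates


# Example: about two minutes of scores (49 segments), with calls early in minute one.
example_scores = [0.1] * 49
example_scores[3] = 0.9
example_scores[4] = 0.7
for candidate in find_candidates(example_scores):
    print(candidate)  # each candidate would then be queued for the moderation UI
```

Raising `min_positive` above 1 would likely cut the number of false positives sent to moderators, at the cost of missing isolated calls.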

Open-source code and open data

- orcaml repository (GitHub)

- aifororcas-livesystem repository (GitHub)

- Pod.Cast training and test data (metadata in GitHub wiki, with links to audio data in AWS/S3)

- Orcasound orcadata wiki (free, open access to live stream data and train/test sets)

- Pod.Cast rounds 1-3

- Coming soon: Pod.Cast rounds 4-10+ (watch the orcadata/wiki)

Implementations

- Live inference system with Orcasound data and a killer whale call model

PyTorch-based VGG-ish model, trained first on global killer whale calls from the Watkins Marine Mammal Sound Database and then on multiple rounds of Southern Resident Killer Whale call data from Orcasound hydrophones. Transfer learning uses ResNet-50, and data loading uses FastAI. The front end is a Blazor Server app that lets moderators confirm or deny candidate detections and discuss them with community scientists or other bioacoustic experts.

- API documentation (REST API for interacting with the AI for Orcas Cosmos DB)

Presentations & products

Dec 2020: Meridian webinar on “Sound detection and classification with deep learning”

Talk by Prakruti Gogia, Akash Mahajan, and Aayush Agrawal on Wednesday, December 16, 2020.

Coming soon:

Podcast recorded in mid-2021

Lead contributors and collaborators

- Prakruti Gogia — Overall lead

- Mike Cowan — Moderation system, front end

- Claire Goetschel — Moderation system, design

- Akash Mahajan — Annotation system

- Aayush Agrawal — Machine learning development

- Chris Hanke — Public relations

- Hackathon participants (part of at least one hackathon in 2019-2021+)

- Nithya Govindarajan

- Herman Wu

- Athapan Arayasantiparb

- Anurag Peshne

- Ming Zhong

- Adele Bai

- Michelle Yang

- Joyce Cahoun

- Kadrina Queyquep

- Trisha Hoy

- Rob Boucher

- Dmitrii Vasilev

- Morgane Austern

- Shahrzad Gholami

- Kunal Mehta

- Diego Rodríguez

- Kenneth Rawlings

- Bioacoustic experts (partners / annotators / beta-testers)

- David Bain (2019+, Orca Conservancy)

- Scott Veirs (2019+, Beam Reach, Orcasound)

- Val Veirs (2020+, Beam Reach, Orcasound)

- Monika Wieland Shields (2021+, Orca Behavior Institute, Orcasound)

* Note: This project is volunteer-driven and is not an official Microsoft product.

Support & credits

- Microsoft Garage on Twitter (organizers of MS Hack 2019)

- Microsoft AI for Earth

- $15k Azure credits and $15k labeling funds to Orca Conservancy (led by Dave Bain)

- $15k Azure credits to University of Washington and Orcasound (Valentina Staneva and Shima Abadi with Scott Veirs, Val Veirs, other Orcasound partners)

- Watkins Marine Mammal Sound Database, Woods Hole Oceanographic Institution (non-commercial academic or personal use of killer whale un/labeled recordings for initial Pod.Cast model training)

- Software: PyTorch | FastAI