Pod.Cast uses a machine learning model to accelerate annotation of biological signals in audio data through web-based crowdsourcing. The tool was used in an active-learning loop: bootstrap from a raw model, then iteratively create annotated training/test sets on audio from Orcasound hydrophones (see orcadata/wiki).

Demo app: podcast.ai4orcas.net

The software lets a user:

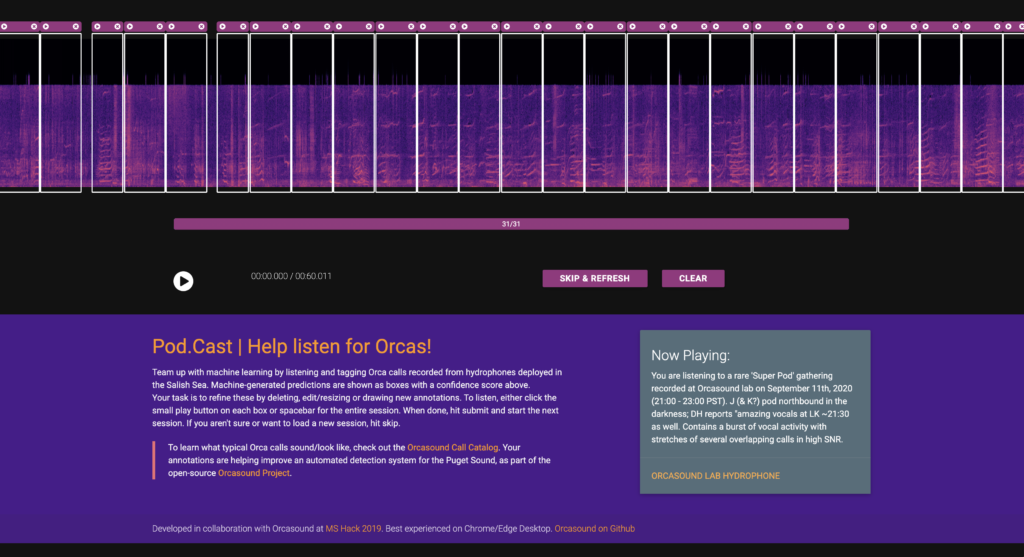

- Predict the time bounds of signals in a recording based on a machine learning model.

- Visualize the audio data as a spectrogram and listen via a web-based playback UI that is synchronized with the spectrogram.

- Validate the predicted annotations and optionally add annotations manually, collaborating with a “crowd” of other human annotators.

- Save annotations from each labeling “round” in a .tsv file.
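
As a rough illustration of the last step above, the sketch below serializes one labeling round to tab-separated text using Python's standard `csv` module. The column names (`wav_filename`, `start_time_s`, `duration_s`, `label`), the `Annotation` type, and the helper names `annotations_to_tsv` and `save_round` are all hypothetical, chosen for illustration only; they are not taken from the Pod.Cast code and may not match its actual .tsv schema.

```python
import csv
import io
from dataclasses import astuple, dataclass, fields


@dataclass
class Annotation:
    # Hypothetical schema: one row per annotated signal in a recording.
    wav_filename: str
    start_time_s: float
    duration_s: float
    label: str


def annotations_to_tsv(annotations):
    """Return the annotations as tab-separated text with a header row."""
    buf = io.StringIO()
    writer = csv.writer(buf, delimiter="\t", lineterminator="\n")
    # Header row is derived from the dataclass field names.
    writer.writerow([f.name for f in fields(Annotation)])
    for ann in annotations:
        writer.writerow(astuple(ann))
    return buf.getvalue()


def save_round(annotations, path):
    """Write one labeling round to a .tsv file at `path`."""
    # newline="" stops Python from translating the "\n" line endings.
    with open(path, "w", newline="", encoding="utf-8") as fh:
        fh.write(annotations_to_tsv(annotations))
```

Under these assumptions, a call such as `save_round(annotations, "round1.tsv")` would produce one file per round, which keeps each round's labels separate and easy to diff or merge later.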

Resources

Open-source code & open data

- orcasound/aifororcas-podcast (GitHub)

- Pod.Cast training and test data (metadata in the GitHub wiki, with links to open audio data in AWS/S3)

Collaborators & contributions

Pod.Cast was developed in July 2019 by Microsoft employees Prakruti Gogia, Akash Mahajan, and Nithya Govindarajan, who volunteered for Orcasound through Microsoft’s annual hackathon. Since then, the tool has been used to label many rounds of open data with help from bioacousticians like Scott Veirs.

- Lead developers

  - Prakruti Gogia (2019+, Microsoft*)

  - Akash Mahajan (2019+, Microsoft*)

- Other developers

  - Nithya Govindarajan (2019, Microsoft*)

- Annotators / beta-testers

  - Scott Veirs (2019+, Beam Reach, Orcasound)

  - Val Veirs (2020+, Beam Reach, Orcasound)

* Note: this project is volunteer-driven and is not an official product of Microsoft.

Support & credits

- Microsoft Garage on Twitter (organizers of MS Hack 2019)

- Watkins Marine Mammal Sound Database, Woods Hole Oceanographic Institution (non-commercial academic or personal use of labeled and unlabeled killer whale recordings, which were used to bootstrap model training and annotation for round #1).

- audio-annotator was forked for the frontend code; audio-annotator uses wavesurfer.js for rendering/playing audio.